Statistical Sampling Techniques in eDiscovery Workflows: Part I

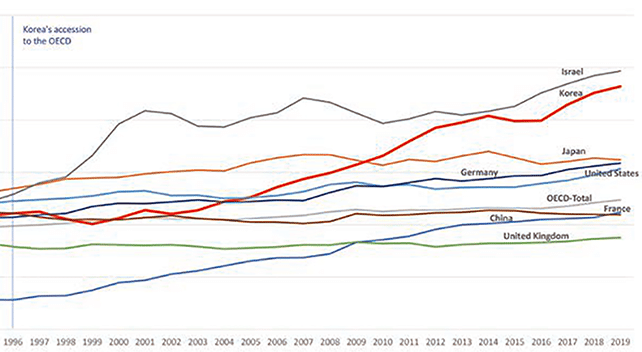

If there were ever a single eDiscovery trend that everyone could agree on, it would surely be this: data volumes in litigation grow year after year, without fail. In fact, some 2019 surveys indicated that increasing data volumes are the biggest concern among eDiscovery practitioners today.

The size of the average collection is burgeoning, due not only to the growing daily volume of email but also to the changing nature of the workplace. For example, our work product and communications might be synced across multiple devices or duplicated in several backup locations, and with data storage costs dropping, we are probably preserving far more data than before.

Corporate IT and Legal departments are quickly waking up to this reality. We have seen more and more calls in the industry for better information governance and data management, as well as for closer scrutiny of outside counsel’s eDiscovery workflows. Even so, a case that would have been considered “large” in gigabyte volume or document count just five years ago would probably not raise any eyebrows today. This trend, combined with rising cost awareness and external legislative pressure on data privacy and data security, is creating a perfect catalyst for change in the way eDiscovery is done. Clients today are looking to seize on every available tool and workflow to reduce the number of documents they need to review and drive down overall discovery spend, albeit in a safe and defensible fashion.

No discussion of data reduction strategies today would be complete without mentioning the machine learning and technology assisted review solutions available to clients, and FRONTEO’s own AI tool, KIBIT Automator, has been showing incredible strength in that arena. But we can also rely on simple statistical techniques and analysis to significantly reduce the burden of discovery, increase the accuracy of document review, and gain a greater measure of control over the litigation process.

Statistics, broadly speaking, is the science of data, and a core part of it deals with giving people the tools to make educated inferences about a large data set by observing only a small portion of it. That makes it a natural partner for eDiscovery, where that is precisely what we want to do most of the time. An in-depth discussion of the merits and applications of different statistical methods, or of the underlying math, is beyond the scope of this blog post; instead, we want to provide an overview of the ways in which you can leverage one of the simplest statistical techniques – sampling – to enhance your workflows.

There are two overarching categories of sampling that you can employ in a given case. Conveniently named, they are ‘probability sampling’ and ‘non-probability sampling’. For the purposes of this article, we will focus on the most popular sampling technique from each category.

Probability Sampling – Simple Random Sampling

Simple random sampling is a process by which a subset of a population is selected at random in such a way that every individual item has an equal probability of being chosen. The main advantage of this randomization is that, as long as each item in the population had an equal chance of being drawn, the sample can be treated as representative of the population it was taken from, and inferences about the whole population can be made from the observations in the sample. The sample itself can be orders of magnitude smaller than the population yet still offer valid, defensible insights into the whole set.
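As a rough illustration, drawing a simple random sample from a list of document IDs takes only a few lines of Python. This is a minimal sketch rather than a feature of any particular review platform: the helper name `simple_random_sample`, the ID format and the seed value are all hypothetical. Recording the seed lets the exact same sample be regenerated later, which can help when the sampling step has to be explained or defended.

```python
import random


def simple_random_sample(doc_ids, sample_size, seed):
    """Draw a simple random sample (without replacement) from doc_ids.

    Hypothetical helper: every ID has the same probability of selection,
    and the same seed always reproduces the same sample.
    """
    if not 0 <= sample_size <= len(doc_ids):
        raise ValueError("sample_size must be between 0 and the population size")
    rng = random.Random(seed)  # private generator, so global random state is untouched
    return rng.sample(doc_ids, sample_size)


if __name__ == "__main__":
    # Hypothetical population of 100,000 document IDs
    population = [f"DOC-{i:06d}" for i in range(1, 100_001)]
    sample = simple_random_sample(population, 400, seed=2020)
    print(len(sample), len(set(sample)))  # 400 400: no document is drawn twice
    print(sample[:3])
```

Python's `random` module is adequate for drawing review samples but is not a cryptographic generator; that distinction only matters if the sample must be unpredictable to an adversary.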

This gap between the number of documents sampled and the size of the population the sample can represent allows eDiscovery practitioners to test, at relatively low cost, assumptions that can affect large document volumes.
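To put a number on that gap, one standard textbook approach (Cochran's sample size formula for estimating a proportion, with a finite population correction) is sketched below. It is an illustration under stated assumptions, not the method used in any particular matter: the helper name is hypothetical, the defaults of 95% confidence and a ±5 percentage-point margin of error are only common conventions, and `expected_proportion=0.5` is the most conservative choice when the true rate is unknown.

```python
import math
from statistics import NormalDist


def sample_size(population_size, confidence=0.95, margin_of_error=0.05,
                expected_proportion=0.5):
    """Documents to sample to estimate a proportion within +/- margin_of_error.

    Hypothetical helper implementing Cochran's formula with a finite
    population correction (normal approximation).
    """
    # Two-sided critical value, e.g. about 1.96 for 95% confidence
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    p = expected_proportion
    n0 = z * z * p * (1 - p) / margin_of_error ** 2  # sample size for an unlimited population
    n = n0 / (1 + (n0 - 1) / population_size)        # finite population correction
    return min(population_size, math.ceil(n))


if __name__ == "__main__":
    for size in (10_000, 100_000, 1_000_000, 10_000_000):
        print(f"{size:>12,} documents -> sample {sample_size(size)}")
```

With these defaults the required sample levels off below 400 documents no matter how large the population grows, which is why a sample of a few hundred documents can speak for ten thousand documents or ten million.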

Typical use cases for simple random sampling in an eDiscovery workflow are quite broad:

- Document culling – document volumes in an early case assessment (ECA) environment can easily run into the millions, and introducing criteria for removing documents (for example, removing emails from certain domains, or those generated by out-of-office replies, calendar invites and so on) can significantly affect the final review volume. Statistically sampling the discarded population and evaluating the results can lend much-needed credibility and defensibility to the process.

- Search term application – this process can be quite painless if the search terms are well established and agreed upon by both parties. If they are not, however, or if certain search terms appear over-broad and bring in thousands more documents than others, it may be worth applying simple random sampling to the outliers and testing their yield (quite simply, how many relevant documents those terms actually bring in) to give yourself some quantitative ammunition during search term negotiations. This approach also works well for testing search term adjustments: for example, sampling the difference between your proposed set of search terms and the opposing side’s, even on a per-term basis if need be, can be a powerful tool in your arsenal.

- Machine Learning / Technology Assisted Review – simple random sampling is typically used to validate the accuracy and completeness of AI decisions in Technology Assisted Review (TAR) processes. This usually happens by measuring metrics like recall and precision against a control set, by measuring the elusion rate through an elusion test, or through some combination of the two. It can also be used to generate the initial training set for the Assisted Review platform, though it is not the only way to generate a training set, nor is it always the most efficient one. (More on this in the section on judgmental sampling in Part II of this blog post.)

- Quality Control – random sampling is probably the most popular way of conducting quality control on documents queued up for production. Many document review service providers use random sampling to generate their Quality Control (QC) sets from the population of documents tagged as responsive, privileged, confidential or under any other important criterion that needs to be double-checked. Although random sampling is a tried and true QC process, it can add significant inefficiency and cost to the QC workflow. This was one of the reasons we developed our KIBIT Automator AI software to use AI-generated heat maps to prioritize the groups of documents most in need of verification.

- Understanding your dataset – although this item is last on our list, it is by no means the least important. Understanding the data you have collected can be critical for many reasons. Measuring the prevalence of relevant documents in the document population, for example, can drive important decisions regarding the use of TAR technology, document review staffing, and litigation logistics planning. A quick random sample of the potential review population can give you insight into all of the above, and much more. It can also flag potential problems with processed documents (if your vendor hasn’t caught them already), identify unexpected foreign languages, and, in general, give you early insight into the type of data your review team will be covering.
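Several of the use cases above (sampling the culled set, measuring a search term's yield, estimating prevalence) come down to the same calculation: estimating a proportion from a random sample and attaching a confidence interval to it. The sketch below uses the Wilson score interval, one standard choice that behaves better than the simple normal approximation when the proportion is close to zero, as it often is in a discarded set. The helper name and the figures in the example (3 relevant documents in a 400-document sample of a 250,000-document culled set) are hypothetical, not real results.

```python
import math
from statistics import NormalDist


def proportion_with_ci(relevant_in_sample, sample_size, confidence=0.95):
    """Return (point estimate, lower bound, upper bound) for a sampled proportion.

    Hypothetical helper using the Wilson score interval.
    """
    if sample_size <= 0 or not 0 <= relevant_in_sample <= sample_size:
        raise ValueError("need sample_size > 0 and 0 <= relevant_in_sample <= sample_size")
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 at 95%
    p_hat = relevant_in_sample / sample_size
    denom = 1 + z * z / sample_size
    centre = (p_hat + z * z / (2 * sample_size)) / denom
    half_width = (z / denom) * math.sqrt(
        p_hat * (1 - p_hat) / sample_size + z * z / (4 * sample_size ** 2)
    )
    # Clamp to [0, 1] to absorb floating-point noise at the edges
    return p_hat, max(0.0, centre - half_width), min(1.0, centre + half_width)


if __name__ == "__main__":
    culled_population = 250_000  # hypothetical size of the discarded set
    p, low, high = proportion_with_ci(3, 400)
    print(f"estimated rate {p:.2%}, 95% CI {low:.2%} to {high:.2%}")
    print(f"roughly {low * culled_population:,.0f} to {high * culled_population:,.0f} "
          "relevant documents in the culled set")
```

Multiplying the interval bounds by the population size turns an abstract rate into an estimated document count, which is often the figure that matters in a meet-and-confer.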
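For TAR validation, the recall, precision and elusion metrics reduce to simple ratios. The following is a minimal sketch under stated assumptions: a control set is represented as two parallel lists of booleans (the reviewers' relevance call and the model's prediction for each document), and an elusion test is summarised as the number of relevant documents found in a random sample drawn from the documents the model predicted non-relevant (often called the null set). All function names and numbers are hypothetical.

```python
def control_set_metrics(reviewer_relevant, model_relevant):
    """Return (recall, precision) for a coded control set.

    Hypothetical helper: both arguments are equal-length lists of booleans,
    one entry per control-set document.
    """
    if len(reviewer_relevant) != len(model_relevant):
        raise ValueError("lists must be the same length")
    pairs = list(zip(reviewer_relevant, model_relevant))
    true_pos = sum(1 for human, model in pairs if human and model)
    false_neg = sum(1 for human, model in pairs if human and not model)
    false_pos = sum(1 for human, model in pairs if model and not human)
    # Recall: share of truly relevant documents the model found
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else float("nan")
    # Precision: share of model-predicted relevant documents that really are relevant
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else float("nan")
    return recall, precision


def elusion(relevant_in_sample, sample_size, null_set_size):
    """Return (elusion rate, estimated relevant documents left in the null set)."""
    rate = relevant_in_sample / sample_size
    return rate, rate * null_set_size


if __name__ == "__main__":
    reviewers = [True, True, False, True, False, False]
    model = [True, False, False, True, True, False]
    print(control_set_metrics(reviewers, model))  # (0.6666666666666666, 0.6666666666666666)
    print(elusion(4, 500, 120_000))
```

Like any sample-based figure, these metrics are estimates and carry their own margin of error, so they are best reported alongside a confidence interval.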